Anyway, back to this post. We know Plexxi uses advanced algorithms and affinities to distribute traffic and apply policy on *their* network fabric. I find this interesting and really like it, but how can the industry take advantage of affinities on a larger scale, using traditional leaf/spine and virtual network architectures rather than only Plexxi switches? If you haven’t realized it already, affinities and the way Plexxi distributes traffic are anti-ECMP and anti-hashing (think about how traffic is distributed across a port-channel). Did you read the reference links at the bottom of my previous post? :) Doesn’t it suck to have to use some arbitrary hash to decide which packets go where?
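To make the hashing complaint concrete, here is a minimal Python sketch of hash-based port-channel member selection. It assumes a simple XOR of the last octet of the source and destination MAC, modulo the number of links; real switches offer various hash inputs and functions, and the MAC addresses and flow rates below are made up for illustration. The point is that the hash never looks at load, so two large flows can land on the same member while another member sits idle.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Flow:
    src_mac: str
    dst_mac: str
    gbps: float  # offered load; only used to report per-member totals


def mac_last_octet(mac: str) -> int:
    """Return the last octet of a MAC address such as '00:50:56:00:00:0a'."""
    return int(mac.split(":")[-1], 16)


def pick_member(flow: Flow, members: int) -> int:
    """Assumed src-dst-MAC hash: XOR the last octets, then modulo the link count."""
    return (mac_last_octet(flow.src_mac) ^ mac_last_octet(flow.dst_mac)) % members


def link_loads(flows, members: int):
    """Total Gbps that ends up on each port-channel member."""
    loads = [0.0] * members
    for f in flows:
        loads[pick_member(f, members)] += f.gbps
    return loads


# Hypothetical traffic: two elephant flows and two small ones.
flows = [
    Flow("00:50:56:00:00:01", "00:50:56:00:00:10", 9.0),
    Flow("00:50:56:00:00:02", "00:50:56:00:00:13", 9.0),
    Flow("00:50:56:00:00:03", "00:50:56:00:00:11", 0.5),
    Flow("00:50:56:00:00:04", "00:50:56:00:00:13", 0.5),
]
print(link_loads(flows, 4))  # [0.0, 18.0, 0.5, 0.5]
```

Both 9 Gbps flows hash to member 1 while member 0 carries nothing, and nothing in the hash function can react to that.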

This brings us to an important question: where else do affinities make sense?

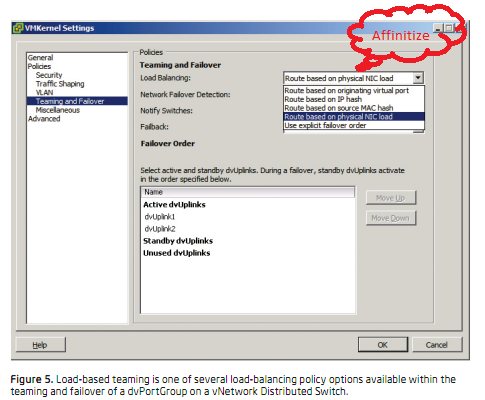

First, think about everywhere multiple paths are available. In networking there will always be multiple paths for redundancy, which is a plus for leveraging affinities, but there are usually LOTS of links during periods of transition and as bandwidth is added. Servers with 1G connections may connect to a 1G Top of Rack (TOR) switch using as many as 16 NICs. How crazy is that? Those NICs may be bundled into a port-channel if the server team doesn't mind talking to the network team. Server teams that want to take matters into their own hands and not talk with the network team usually look at which policies the built-in vswitch offers for network redundancy and failover. These options, or simple algorithms, vary, but very common ones are forms of source MAC/port ID pinning and Load Based Teaming. With the former, the vswitch pins all traffic from a particular source MAC or source vswitch port to a particular uplink (the server NIC that connects to the TOR); with the latter, traffic is distributed across the uplinks (server NICs) based on actual load. The load could be checked every X seconds and the traffic then re-distributed. See the screenshot below from vCenter for the options available on a VMware virtual switch.
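The two vswitch policies described above can be sketched in a few lines of Python. This is not VMware's implementation: `pin_by_port_id` assumes a simple port-ID-modulo-uplink-count mapping, and `rebalance` is a hypothetical single pass of a load-based check with an assumed 75% utilization threshold and made-up per-port loads on 1G uplinks. Source MAC pinning would work the same way, with a MAC-derived key in place of the port ID.

```python
def pin_by_port_id(port_ids, uplinks):
    """Source port ID pinning (assumed modulo mapping): each port gets one fixed uplink."""
    return {port: port % uplinks for port in port_ids}


def uplink_totals(assignment, port_load, uplinks):
    """Sum the offered load (Gbps) of every port pinned to each uplink."""
    totals = [0.0] * uplinks
    for port, link in assignment.items():
        totals[link] += port_load[port]
    return totals


def rebalance(assignment, port_load, uplink_gbps, uplinks, threshold=0.75):
    """One hypothetical load-based teaming pass.

    For each uplink whose utilization exceeds `threshold` (an assumed value),
    move its busiest port to the least-loaded uplink, but only if the target
    would then carry less than the overloaded uplink did. Returns a new mapping.
    """
    new = dict(assignment)
    totals = uplink_totals(new, port_load, uplinks)
    for link in range(uplinks):
        if totals[link] / uplink_gbps <= threshold:
            continue
        ports = [p for p, l in new.items() if l == link]
        busiest = max(ports, key=lambda p: port_load[p])
        target = min(range(uplinks), key=lambda l: totals[l])
        if target != link and totals[target] + port_load[busiest] < totals[link]:
            new[busiest] = target
            totals = uplink_totals(new, port_load, uplinks)
    return new


# Hypothetical example: four vswitch ports, two 1G server NICs.
pinned = pin_by_port_id([0, 1, 2, 3], uplinks=2)
port_load = {0: 0.5, 1: 0.1, 2: 0.4, 3: 0.1}  # Gbps per port (made-up numbers)
balanced = rebalance(pinned, port_load, uplink_gbps=1.0, uplinks=2)
print(pinned)    # {0: 0, 1: 1, 2: 0, 3: 1}
print(balanced)  # {0: 1, 1: 1, 2: 0, 3: 1}
```

Pinning is stable but blind to load (uplink 0 starts at 90% while uplink 1 sits at 20%). The load-based pass moves a port only when the destination ends up below the overloaded uplink's old total, which keeps the same port from immediately bouncing back.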

Leaf to Spine

Without going into tons of detail, you may already see where I am going with this. 10GbE is everywhere today, and from a leaf/spine perspective we are already transitioning from 10GbE to 40/100GbE in the spine/core of the network. Many TOR switches have 4 x 40GbE interfaces that can also operate as 16 x 10GbE interfaces. This means a single TOR switch could have 16 uplinks into the spine, spread across anywhere from 2 to 16 spine switches. Today, ECMP or port-channel hashing algorithms decide which uplink each flow takes. Tomorrow, maybe affinities could? Do you see a benefit in that?
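Here is a rough Python sketch of that contrast at the leaf/spine layer, assuming one TOR with 16 x 10GbE uplinks. `ecmp_uplink` stands in for a switch's ECMP hash over the 5-tuple (real ASICs typically use CRC-style hashes, not SHA-256), and `place` is a hypothetical affinity-aware scheme in which an application with a declared affinity gets explicitly reserved uplinks while everything else is hashed over the remaining ones. The application names, addresses, and reservation are invented for illustration; this is not Plexxi's algorithm.

```python
import hashlib

UPLINKS = 16  # e.g. 4 x 40GbE broken out into 16 x 10GbE


def ecmp_uplink(five_tuple, uplinks=UPLINKS):
    """Stateless ECMP-style choice: hash the 5-tuple, take it modulo the path count."""
    key = "|".join(map(str, five_tuple)).encode()
    digest = hashlib.sha256(key).digest()
    return int.from_bytes(digest[:4], "big") % uplinks


def place(flows, affinities, uplinks=UPLINKS):
    """Hypothetical affinity-aware placement.

    `affinities` maps an application name to the uplinks reserved for it.
    Flows with an affinity are spread round-robin over their reserved uplinks;
    every other flow is ECMP-hashed over the unreserved uplinks.
    """
    reserved = {u for links in affinities.values() for u in links}
    shared = [u for u in range(uplinks) if u not in reserved]
    counters = {app: 0 for app in affinities}
    placement = {}
    for app, five_tuple in flows:
        if app in affinities:
            links = affinities[app]
            placement[five_tuple] = links[counters[app] % len(links)]
            counters[app] += 1
        else:
            placement[five_tuple] = shared[ecmp_uplink(five_tuple, len(shared))]
    return placement


# Hypothetical intent: reserve uplinks 0 and 1 for storage replication.
affinities = {"storage-replication": [0, 1]}
flows = [
    ("storage-replication", ("10.0.0.10", "10.0.9.10", 6, 50001, 3260)),
    ("storage-replication", ("10.0.0.11", "10.0.9.11", 6, 50002, 3260)),
    ("storage-replication", ("10.0.0.12", "10.0.9.12", 6, 50003, 3260)),
    ("web", ("10.0.0.20", "10.0.5.1", 6, 40001, 443)),
    ("web", ("10.0.0.21", "10.0.5.2", 6, 40002, 443)),
]
placement = place(flows, affinities)
for app, ft in flows:
    print(app, ft[0], "-> uplink", placement[ft])
```

The storage flows land deterministically on uplinks 0, 1, 0 because the intent was stated up front, while the web flows go wherever the hash sends them among uplinks 2 through 15.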

What do I think?

There is much more to Plexxi than cool hardware. Their controller, or rather its algorithmic intelligence, is pretty damn important; I personally think it’s more important than the hardware. I would love to see them explore what can be done with Open vSwitch and their controller. They could even extend the OVS user space daemon, ovs-vswitchd, or replace it with their own, as other start-ups are doing, since it’s open source after all. And since OVS can run on certain hardware switches, this would make Plexxi relevant to a wider range of customers and across the complete network stack, physical and virtual: OVS-only deployments, ODM switches running OVS, and of course Plexxi native switches with the optical interconnect. Call me crazy, but it sure sounds interesting to me.

Thanks,

Jason

Twitter: @jedelman8